In the dynamic world of finance, modeling uncertainty and temporal dependencies is vital for effective decision-making. Among the mathematical tools used to capture such dynamics, Markov chains stand out for their ability to model systems that evolve over time with memoryless properties.

Markov chains offer a robust framework to analyze stochastic processes where the future state depends only on the present state, not the full history. This property is instrumental in many financial applications such as credit rating transitions, option pricing, portfolio optimization, and risk management.

This article dives deep into the role of Markov chains in stochastic financial modeling, explaining their theoretical foundation, practical implementations, and how they contribute to more accurate financial forecasting.

Table of Contents

- What Are Markov Chains?
- Key Properties and Types of Markov Chains
- The Markov Property and Its Financial Implications
- Discrete-Time vs. Continuous-Time Markov Chains
- Applications of Markov Chains in Finance
- Modeling Credit Rating Transitions
- Markov Chains in Option Pricing and Interest Rate Models
- Portfolio Optimization Using Markov Decision Processes
- Risk Assessment and Scenario Analysis
- Hidden Markov Models in Financial Time Series
- Calibration and Estimation of Markov Models
- Limitations and Challenges
- Advances: Coupling Markov Chains with Machine Learning
- Conclusion

What Are Markov Chains?

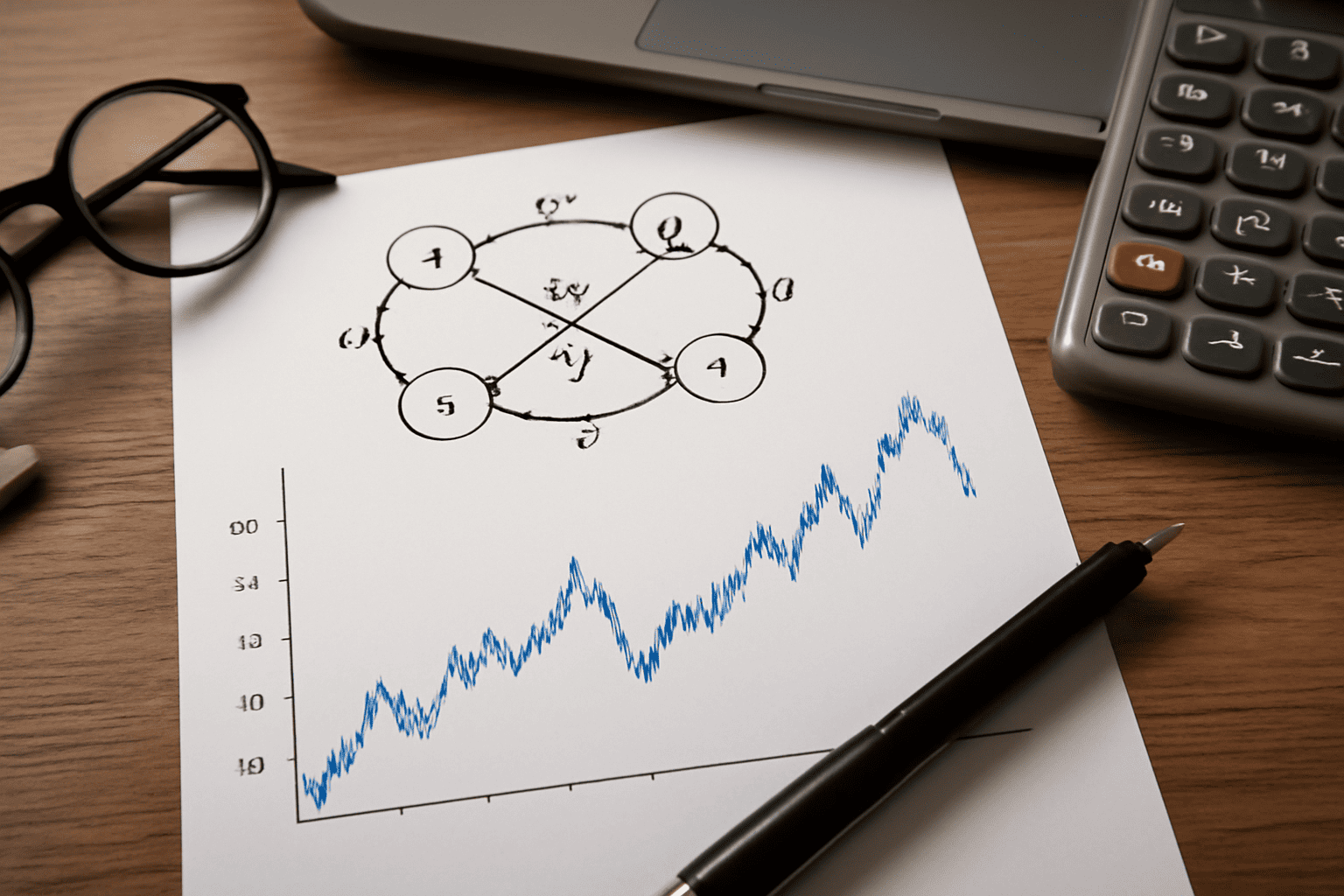

A Markov chain is a mathematical system that undergoes transitions from one state to another in a state space according to certain probabilistic rules.

Formal Definition

A stochastic process $\{X_t\}_{t \geq 0}$ is a Markov chain if:

$$P(X_{t+1} = x_{t+1} \mid X_t = x_t, X_{t-1} = x_{t-1}, \ldots, X_0 = x_0) = P(X_{t+1} = x_{t+1} \mid X_t = x_t)$$

This is the Markov property, meaning the future state depends only on the present state.

Key Properties and Types of Markov Chains

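- Transition Matrix: Contains the probabilities $P_{ij}$ of moving from state $i$ to state $j$; each row sums to 1.
- Stationary Distribution: A probability distribution $\pi$ over states that remains unchanged by transitions, i.e. $\pi P = \pi$.
- Ergodicity: The chain can reach any state from any other state (irreducibility) and is aperiodic. A finite chain with both properties converges to a unique stationary distribution regardless of its starting state.
- Absorbing States: States that, once entered, cannot be left (for example, default in a credit rating model).
- Types:
  - Discrete-time Markov chains (DTMC)
  - Continuous-time Markov chains (CTMC)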
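
The properties above can be illustrated with a minimal sketch in plain Python. The three-state regime matrix `P` and the helper names `step`, `stationary`, and `absorbing_states` are assumptions made for illustration; they do not come from any particular data set or library.

```python
# Hypothetical 3-state market-regime chain (rows: bull, bear, flat).
# Entry P[i][j] is the probability of moving from state i to state j.
P = [
    [0.90, 0.05, 0.05],
    [0.10, 0.80, 0.10],
    [0.25, 0.25, 0.50],
]

def step(dist, P):
    """Advance a row distribution by one transition: dist @ P."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

def stationary(P, tol=1e-12, max_iter=10_000):
    """Approximate the stationary distribution by repeated stepping.

    This converges for an ergodic chain; it is not a general-purpose solver.
    """
    dist = [1.0 / len(P)] * len(P)
    for _ in range(max_iter):
        nxt = step(dist, P)
        if max(abs(a - b) for a, b in zip(dist, nxt)) < tol:
            return nxt
        dist = nxt
    return dist

def absorbing_states(P):
    """Indices of states whose self-transition probability is 1."""
    return [i for i, row in enumerate(P) if row[i] == 1.0]

pi = stationary(P)
print("stationary distribution:", [round(p, 4) for p in pi])
print("absorbing states:", absorbing_states(P))
```

Because every entry of this example matrix is positive, the chain is irreducible and aperiodic, so the iteration settles on the same distribution from any starting point.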

The Markov Property and Its Financial Implications

In finance, the Markov property simplifies modeling by assuming that the future market state depends only on the current state (e.g., the current credit rating or market regime), not on the path taken to reach it.

This assumption enables tractable mathematical models and easier calibration.

Discrete-Time vs. Continuous-Time Markov Chains

- DTMCs: Transitions happen at fixed, discrete time intervals (e.g., daily or monthly).
- CTMCs: Transitions can occur at any point in continuous time, which is useful for modeling credit risk and interest rates.
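
To make the distinction concrete: a DTMC is described by a one-step transition matrix, while a time-homogeneous CTMC is described by a generator matrix $Q$ whose transition probabilities over a horizon $t$ are $P(t) = e^{Qt}$. The sketch below computes this with a small hand-written matrix exponential so that it runs without third-party packages; the two-state generator and the default intensity of 0.10 per year are assumed values. In practice a library routine such as `scipy.linalg.expm` would normally be used instead.

```python
import math

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def expm(A, terms=20, squarings=10):
    """Matrix exponential via a truncated Taylor series with scaling and squaring."""
    n = len(A)
    scaled = [[a / 2 ** squarings for a in row] for row in A]
    result, term = identity(n), identity(n)
    for k in range(1, terms + 1):
        # term holds scaled**k / k!
        term = [[x / k for x in row] for row in matmul(term, scaled)]
        result = [[r + t for r, t in zip(r_row, t_row)]
                  for r_row, t_row in zip(result, term)]
    for _ in range(squarings):
        result = matmul(result, result)
    return result

def transition_matrix(Q, t):
    """P(t) = exp(Q * t) for a time-homogeneous CTMC with generator Q."""
    return expm([[q * t for q in row] for row in Q])

# Hypothetical generator: state 0 = performing, state 1 = default (absorbing).
# Rows of a generator sum to zero; 0.10 is an assumed default intensity per year.
Q = [[-0.10, 0.10],
     [0.00, 0.00]]

P_2y = transition_matrix(Q, 2.0)
closed_form = 1.0 - math.exp(-0.10 * 2.0)  # known result for this two-state case
print("2-year default probability:", P_2y[0][1], "closed form:", closed_form)
```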

Applications of Markov Chains in Finance

Markov chains are widely applied in:

- Credit Risk Modeling
- Option Pricing
- Interest Rate Modeling
- Portfolio Management
- Risk Assessment and Scenario Analysis

Modeling Credit Rating Transitions

Credit rating agencies publish transition matrices that estimate probabilities of moving between credit ratings (e.g., AAA to AA) over a fixed period.

Markov chains model these rating migrations, which are crucial for:

- Pricing credit derivatives
- Calculating expected default frequencies
- Stress testing credit portfolios
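
As a rough illustration of how a published one-year matrix is used, the sketch below raises it to a power to obtain multi-year migration and cumulative default probabilities. The three-state matrix (`A`, `B`, and default `D`) holds made-up numbers, not agency data, and the calculation assumes the same matrix applies every year (time homogeneity).

```python
def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def matrix_power(P, n):
    """n-step transition matrix P**n by repeated multiplication."""
    result = identity(len(P))
    for _ in range(n):
        result = matmul(result, P)
    return result

# Hypothetical one-year rating transition matrix; default (D) is absorbing.
ratings = ["A", "B", "D"]
P_1y = [
    [0.90, 0.08, 0.02],  # from A
    [0.10, 0.80, 0.10],  # from B
    [0.00, 0.00, 1.00],  # from D
]

P_5y = matrix_power(P_1y, 5)
default_col = ratings.index("D")
for name, row in zip(ratings, P_5y):
    print(f"5-year cumulative default probability from {name}: {row[default_col]:.4f}")
```

Because default is absorbing, the default column of the n-year matrix is a cumulative default probability, which is why it grows with the horizon.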

Markov Chains in Option Pricing and Interest Rate Models

Markov chains approximate continuous stochastic processes underlying asset prices or interest rates.

- Lattice methods: Binomial and trinomial trees are discrete-time Markov chains that approximate price evolution.
- Short-rate models: CTMCs can be used to approximate how the short rate changes over time.
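
A binomial tree is perhaps the simplest example of the lattice idea: at each step the price moves up or down with probabilities that depend only on the current node. The sketch below prices a European call using the Cox-Ross-Rubinstein parameterization, which is one common choice among several; the function name and all input values are assumptions for illustration.

```python
import math

def crr_european_call(s0, strike, rate, sigma, maturity, steps):
    """Price a European call on a Cox-Ross-Rubinstein binomial tree."""
    dt = maturity / steps
    up = math.exp(sigma * math.sqrt(dt))
    down = 1.0 / up
    # Risk-neutral probability of an up move over one step.
    p_up = (math.exp(rate * dt) - down) / (up - down)
    discount = math.exp(-rate * dt)

    # Terminal payoffs; node j corresponds to j up moves out of `steps`.
    values = [max(s0 * up ** j * down ** (steps - j) - strike, 0.0)
              for j in range(steps + 1)]

    # Backward induction: each node is the discounted expectation of its two children.
    for _ in range(steps):
        values = [discount * (p_up * values[j + 1] + (1.0 - p_up) * values[j])
                  for j in range(len(values) - 1)]
    return values[0]

# Hypothetical inputs: spot 100, strike 100, 5% rate, 20% volatility, 1 year.
price = crr_european_call(100.0, 100.0, 0.05, 0.20, 1.0, steps=200)
print(f"binomial call price: {price:.4f}")
```

As the number of steps grows, the tree price should approach the Black-Scholes value, which is about 10.45 for these inputs.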

Portfolio Optimization Using Markov Decision Processes

Markov Decision Processes (MDPs) extend Markov chains by incorporating decisions and rewards, enabling:

- Dynamic portfolio allocation
- Optimal trading strategies under uncertainty
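
A toy version of such an allocation problem can be solved with value iteration. In the sketch below the state is a pair (market regime, current holding), the action is the holding chosen for the next period, and switching incurs a cost. Every number (regime probabilities, returns, switching cost, discount factor) is an assumption chosen only to keep the example small.

```python
regimes = ["bull", "bear"]
positions = ["stocks", "cash"]

# Hypothetical regime dynamics and per-period returns.
regime_P = {"bull": {"bull": 0.9, "bear": 0.1},
            "bear": {"bull": 0.3, "bear": 0.7}}
period_return = {("bull", "stocks"): 0.020, ("bear", "stocks"): -0.030,
                 ("bull", "cash"): 0.002, ("bear", "cash"): 0.002}
SWITCH_COST = 0.01  # assumed cost of changing the holding
GAMMA = 0.95        # assumed discount factor

states = [(r, p) for r in regimes for p in positions]

def bellman_backup(V):
    """One Bellman optimality update; returns new values and the greedy policy."""
    new_V, policy = {}, {}
    for regime, held in states:
        q = {}
        for target in positions:
            cost = SWITCH_COST if target != held else 0.0
            reward = period_return[(regime, target)] - cost
            future = sum(prob * V[(nxt, target)]
                         for nxt, prob in regime_P[regime].items())
            q[target] = reward + GAMMA * future
        best = max(q, key=q.get)
        new_V[(regime, held)], policy[(regime, held)] = q[best], best
    return new_V, policy

def value_iteration(tol=1e-10):
    V = {s: 0.0 for s in states}
    while True:
        new_V, policy = bellman_backup(V)
        if max(abs(new_V[s] - V[s]) for s in states) < tol:
            return new_V, policy
        V = new_V

V, policy = value_iteration()
for state in states:
    print(state, "->", policy[state], f"(value {V[state]:.4f})")
```

Including the current holding in the state is what makes the switching cost expressible while keeping the problem Markovian.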

Risk Assessment and Scenario Analysis

Markov chains help simulate multiple market scenarios by generating sequences of states, aiding in:

- Stress testing
- Calculating Value at Risk (VaR) under various market regimes
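
One way to sketch this is a small Monte Carlo simulation: draw a regime path from the chain, draw a return in each period from a regime-specific distribution, and read VaR off the simulated losses. The two regimes, their transition probabilities, and the normal return parameters below are all assumed values, and the empirical-quantile VaR is a deliberately simple estimator.

```python
import random

regimes = ["calm", "stressed"]
# Hypothetical daily regime transition probabilities (order matches `regimes`).
P = {"calm": [0.98, 0.02], "stressed": [0.10, 0.90]}
# Hypothetical daily return parameters per regime: (mean, volatility).
daily = {"calm": (0.0005, 0.01), "stressed": (-0.0010, 0.03)}

def simulate_path(rng, start, horizon):
    """Return the visited regimes and the cumulative return over `horizon` days."""
    state, total, path = start, 0.0, []
    for _ in range(horizon):
        mu, sigma = daily[state]
        total += rng.gauss(mu, sigma)
        path.append(state)
        state = rng.choices(regimes, weights=P[state])[0]
    return path, total

def value_at_risk(returns, level=0.99):
    """Empirical VaR: the loss at the given quantile of simulated outcomes."""
    losses = sorted(-r for r in returns)
    index = min(int(level * len(losses)), len(losses) - 1)
    return losses[index]

rng = random.Random(0)  # fixed seed so the run is repeatable
n_paths, horizon = 5000, 10
var_calm = value_at_risk(
    [simulate_path(rng, "calm", horizon)[1] for _ in range(n_paths)])
var_stressed = value_at_risk(
    [simulate_path(rng, "stressed", horizon)[1] for _ in range(n_paths)])
print(f"10-day 99% VaR starting calm:     {var_calm:.4f}")
print(f"10-day 99% VaR starting stressed: {var_stressed:.4f}")
```

Conditioning on the starting regime is what separates this from a single-distribution VaR: the same portfolio carries a different risk figure depending on the current state.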

Hidden Markov Models in Financial Time Series

Hidden Markov Models (HMMs) infer unobserved market regimes (bull, bear) from observable data (prices, returns), improving:

- Volatility forecasting
- Regime-switching asset allocation
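
The inference step can be sketched with the forward (filtering) recursion of a two-state HMM with Gaussian emissions. The regime parameters here are assumed to be known and are invented for illustration; in practice they would typically be estimated first, for example with the Baum-Welch (EM) algorithm.

```python
import math

def normal_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

# Hypothetical parameters; state 0 = bull, state 1 = bear.
A = [[0.95, 0.05],
     [0.10, 0.90]]                       # regime transition matrix
emission = [(0.001, 0.01), (-0.002, 0.03)]  # (mean, volatility) of daily returns
initial = [0.5, 0.5]

def filter_regimes(returns):
    """Forward algorithm with per-step normalization.

    Returns P(regime_t | returns up to t) for each time step t.
    """
    filtered, prior = [], initial
    for r in returns:
        # Update: weight the prior by the likelihood of the observed return.
        weighted = [prior[k] * normal_pdf(r, *emission[k]) for k in range(2)]
        total = sum(weighted)
        posterior = [w / total for w in weighted]
        filtered.append(posterior)
        # Predict: push the posterior through the transition matrix.
        prior = [sum(posterior[i] * A[i][j] for i in range(2)) for j in range(2)]
    return filtered

# Made-up return series: three quiet days followed by three turbulent ones.
returns = [0.002, -0.001, 0.003, -0.045, 0.038, -0.052]
for r, (p_bull, p_bear) in zip(returns, filter_regimes(returns)):
    print(f"return {r:+.3f} -> P(bull) = {p_bull:.3f}, P(bear) = {p_bear:.3f}")
```

The filtered bear probability should jump once the large moves arrive, since returns of that size are far more likely under the high-volatility regime.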

Calibration and Estimation of Markov Models

Estimating transition probabilities involves:

- Historical data analysis
- Maximum likelihood estimation
- Bayesian approaches

Calibration aligns model parameters with observed data so that the model reflects real market dynamics.
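
For a fully observed discrete-time chain, the maximum likelihood estimate of each transition probability is the observed count of that transition divided by the number of departures from the origin state. The sketch below implements this and, as an optional Bayesian variant, adds a pseudo-count to every cell, which gives the posterior mean under a symmetric Dirichlet prior. The function name, the `pseudo_count` parameter, and the rating history are all hypothetical.

```python
from collections import Counter

def estimate_transition_matrix(sequence, states, pseudo_count=0.0):
    """Estimate transition probabilities from one observed state sequence.

    pseudo_count = 0 gives the maximum likelihood estimate; a positive value
    gives the posterior mean under a symmetric Dirichlet prior on each row.
    Rows with no observations and no pseudo-count are left at zero.
    """
    counts = Counter(zip(sequence, sequence[1:]))
    matrix = {}
    for i in states:
        row_total = sum(counts[(i, j)] for j in states) + pseudo_count * len(states)
        matrix[i] = {
            j: (counts[(i, j)] + pseudo_count) / row_total if row_total else 0.0
            for j in states
        }
    return matrix

# Made-up yearly rating history for a single issuer.
states = ["A", "B", "D"]
observed = ["A", "A", "B", "A", "A", "A", "B", "B", "D"]

mle = estimate_transition_matrix(observed, states)
smoothed = estimate_transition_matrix(observed, states, pseudo_count=1.0)
for i in states:
    print(i, {j: round(p, 3) for j, p in mle[i].items()})
```

With a sequence this short, the estimates are very noisy, and the zero row for `D` shows why sparse data often motivates a prior or pooling across many issuers.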

Limitations and Challenges

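- The Markov assumption may be too simplistic for markets with memory, such as those showing volatility clustering or momentum.
- Parameter estimation requires extensive, high-quality data.
- Complexity grows with the size of the state space: a transition matrix over $n$ states has $n^2$ entries to estimate.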

Advances: Coupling Markov Chains with Machine Learning

Integrating Markov models with machine learning can improve:

- Predictive accuracy
- Adaptability to changing market conditions
- Automated parameter tuning

Conclusion

Markov chains provide a powerful yet intuitive framework for modeling stochastic financial systems. Their ability to capture state-dependent dynamics with mathematical rigor makes them essential for credit risk modeling, option pricing, portfolio management, and beyond.

Understanding and leveraging Markov chains equips financial professionals with tools to navigate uncertainty, optimize decisions, and enhance risk management in complex markets.